* below indicates equal contribution

Polarity-Aware Probing for Quantifying Latent Alignment in Language Models

S. Sadiekh, E. Ericheva, C. Agarwal: AAAI, 2026

Oral Acceptance

Paper | Code | HuggingFace | Video

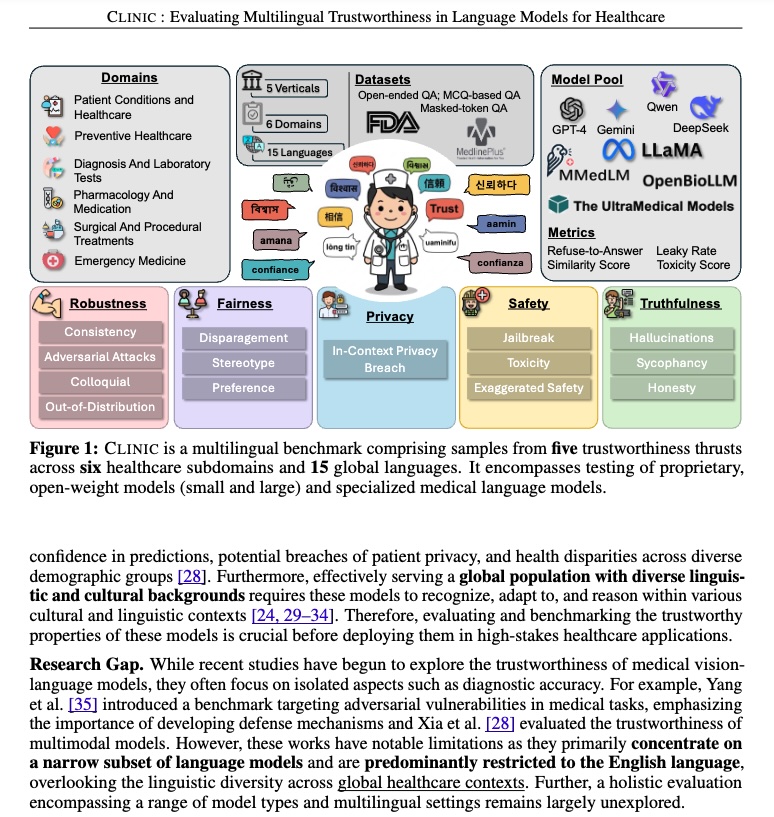

CLINIC: Evaluating Multilingual Trustworthiness in Language Models for Healthcare

A. Ghosh, S. Sridhar, RK Ravi, M. Muhsin, S. Saha, C. Agarwal: arXiv, 2025

Paper | Code | HuggingFace

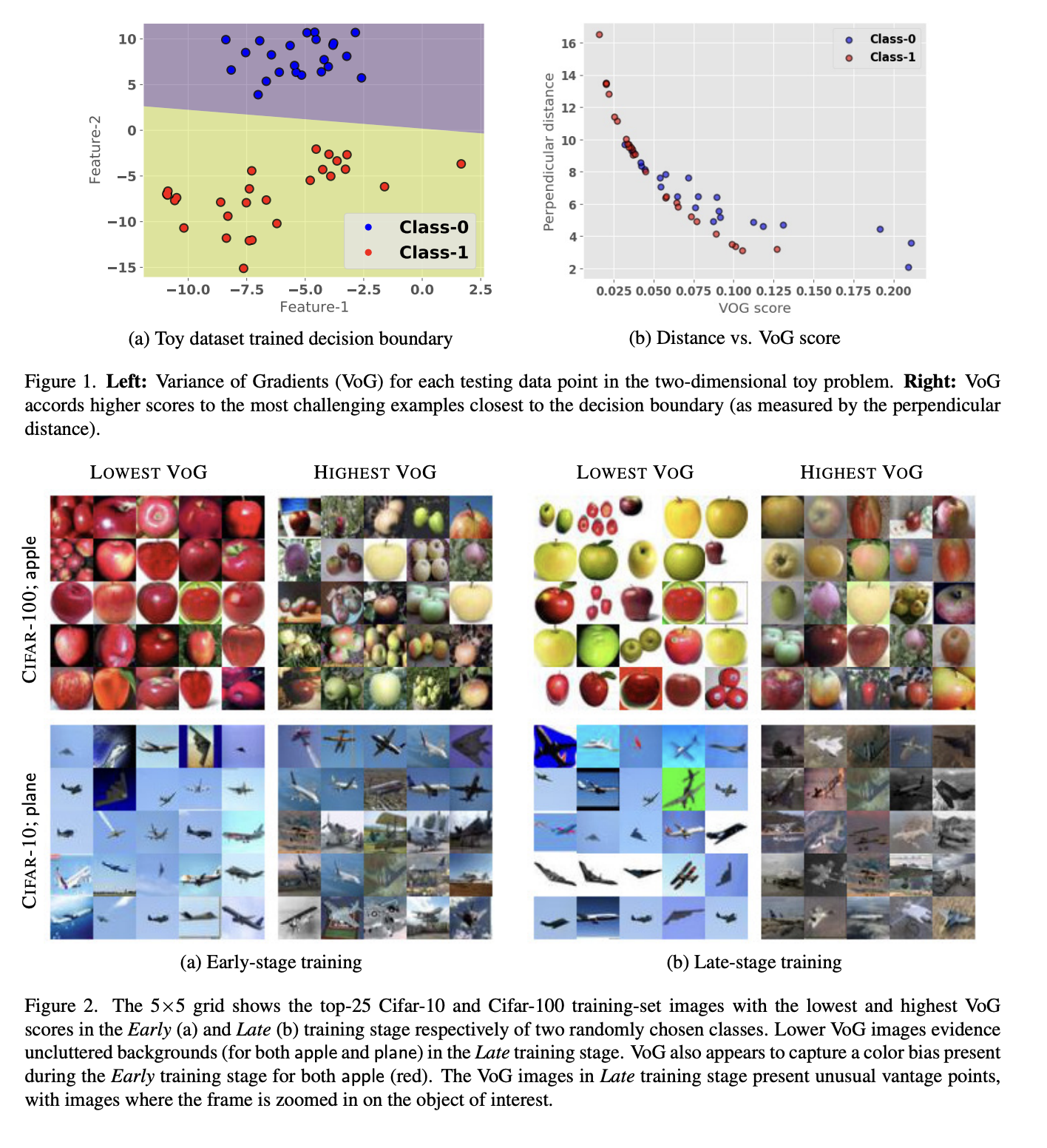

Estimating example difficulty using variance of gradients

C. Agarwal, D. D'souza, and S. Hooker: CVPR, 2022

Paper | Code | Project Website | 58 GitHub ★

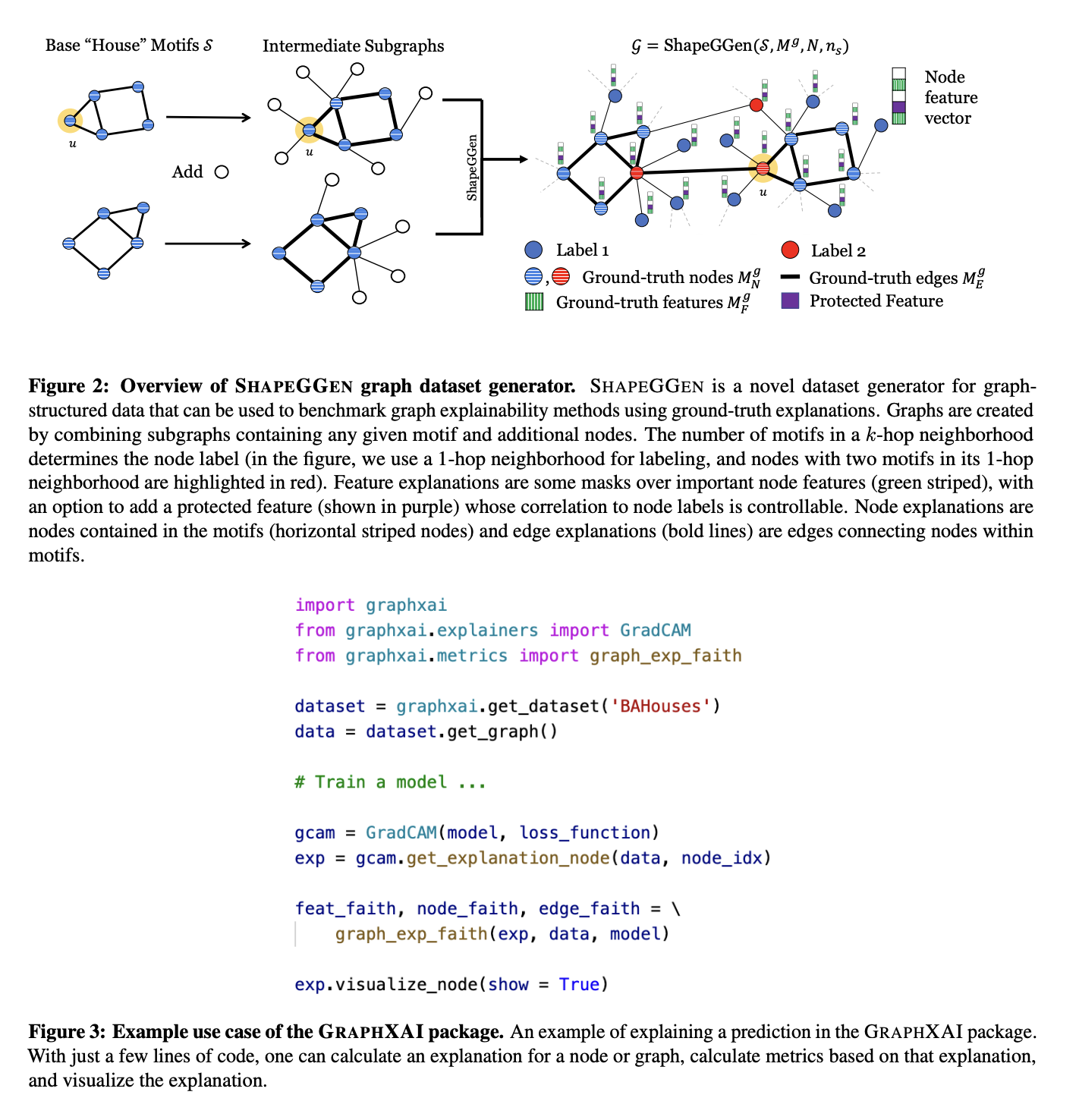

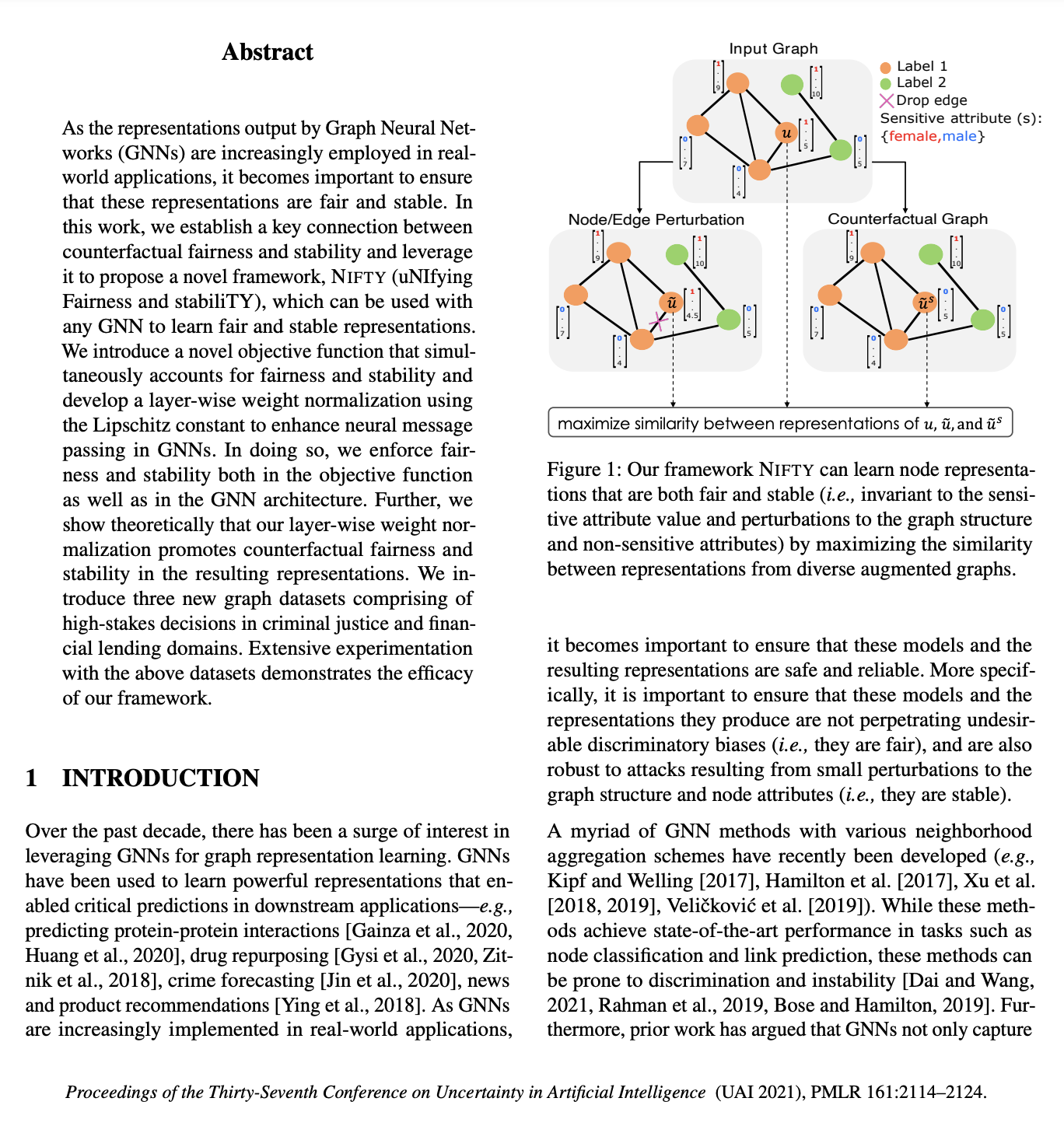

Towards a unified framework for fair and stable graph representation learning

C. Agarwal, H. Lakkaraju, and M. Zitnik: UAI, 2021

Paper | Code | 37 GitHub ★